Receptive Fields Selection for Binary Feature Description

Bin Fan*, Qingqun Kong*, Tomasz Trzcinski+, Zhiheng Wang$, Chunhong Pan*, and Pascal Fua+

*National Laboratory of Pattern Recognition (NLPR), Institute of Automation, Chinese Academy of Sciences, China

+CVLab, EPFL, Switzerland

$Henan Polytechnic University, China

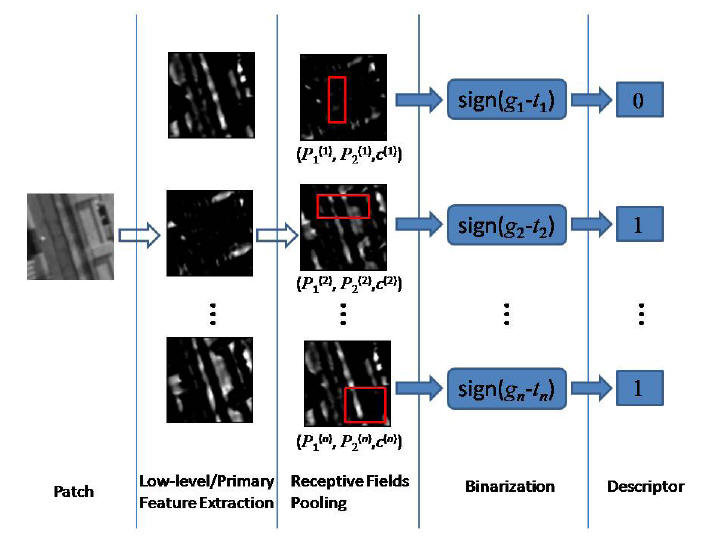

Left: procedure of binary feature construction. Right: visualization of the learned receptive fields.

This paper proposes a novel data-driven method for designing a binary feature descriptor, which we call the Receptive Fields Descriptor (RFD). Technically, RFD is constructed by thresholding the responses of a set of receptive fields, which are selected greedily from a large number of candidates according to their distinctiveness and correlations. By using two different kinds of receptive fields for selection (rectangular pooling areas and Gaussian pooling areas), we obtain two binary descriptors, RFDR and RFDG, respectively. The construction procedure is summarized in the top left figure.
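As an illustration of the thresholding step, the following minimal sketch builds one bit per receptive field from rectangular pooling areas and compares two descriptors by Hamming distance. It is not the released C++ implementation: the feature-map layout, the `(channel, top, left, bottom, right)` field encoding, the mean pooling, and the "greater than threshold" convention are all assumptions made for this example.

```python
from typing import List, Sequence, Tuple

# Hypothetical encoding of a rectangular receptive field:
# (channel, top, left, bottom, right), with bottom/right exclusive.
Field = Tuple[int, int, int, int, int]
Maps = Sequence[Sequence[Sequence[float]]]  # maps[channel][row][col]


def rect_response(maps: Maps, field: Field) -> float:
    """Mean value of one feature map over a rectangular pooling area."""
    c, top, left, bottom, right = field
    total = 0.0
    for y in range(top, bottom):
        for x in range(left, right):
            total += maps[c][y][x]
    return total / ((bottom - top) * (right - left))


def binary_descriptor(maps: Maps, fields: Sequence[Field],
                      thresholds: Sequence[float]) -> List[int]:
    """One bit per field: 1 if the pooled response exceeds its threshold (assumed convention)."""
    return [1 if rect_response(maps, f) > t else 0
            for f, t in zip(fields, thresholds)]


def hamming(a: Sequence[int], b: Sequence[int]) -> int:
    """Number of positions where two equal-length bit lists differ."""
    return sum(x != y for x, y in zip(a, b))


# Toy patch: a single 4x4 feature map whose right half is active.
maps = [[[0, 0, 1, 1] for _ in range(4)]]
fields = [(0, 0, 0, 4, 2), (0, 0, 2, 4, 4)]  # left half, right half
desc = binary_descriptor(maps, fields, thresholds=[0.5, 0.5])
```

In practice the bits would be packed into machine words so that the Hamming distance reduces to XOR and population count, which is where binary descriptors gain their matching speed.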

The proposed binary descriptors significantly outperform state-of-the-art binary descriptors (BinBoost, BGM, BRIEF, etc.) in image matching on two benchmark datasets (Patch Dataset and Oxford Dataset), while performing similarly to the best floating-point descriptors at a fraction of the processing time. Moreover, object recognition experiments on two benchmarks (ZuBuD Dataset and Kentucky Dataset) further validate the effectiveness of the proposed method as well as its generalization ability.

From the visualization (top right figure), we can see that the selected receptive fields concentrate on the central part of the patch, indicating that central pixels are more important than those far from the center. This is consistent with previous work. Interestingly, the selected rectangular receptive fields exhibit similarity to the Gaussian weighting strategy used in SIFT, while the selected Gaussian receptive fields exhibit similarity to the pooling layout of DAISY. This may explain why RFDG performs better than RFDR, since previous work has found DAISY-like spatial pooling to be more efficient. The rightmost column in the top right figure shows the first 8 receptive fields selected when training on Yosemite.
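The greedy selection by distinctiveness and correlation can be sketched as follows. This is only an illustration under stated assumptions: each candidate is assumed to come with a precomputed distinctiveness score (higher is better) and with its binary outputs on a common set of training patches, correlation is measured here with the Pearson coefficient, and `max_corr` and `k` are hypothetical parameters rather than values from the paper.

```python
import math
from typing import List, Sequence


def pearson(a: Sequence[float], b: Sequence[float]) -> float:
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0
    return cov / math.sqrt(var_a * var_b)


def greedy_select(scores: Sequence[float], bits: Sequence[Sequence[int]],
                  max_corr: float, k: int) -> List[int]:
    """Visit candidates from most to least distinctive; keep a candidate only if
    its outputs are weakly correlated with every candidate already kept."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    selected: List[int] = []
    for i in order:
        if all(abs(pearson(bits[i], bits[j])) <= max_corr for j in selected):
            selected.append(i)
            if len(selected) == k:
                break
    return selected


# Candidate 1 duplicates candidate 0, so it is skipped despite its high score.
scores = [0.9, 0.8, 0.7]
bits = [[0, 1, 0, 1], [0, 1, 0, 1], [0, 0, 1, 1]]
chosen = greedy_select(scores, bits, max_corr=0.5, k=2)
```

The correlation check is what keeps the descriptor compact: a highly distinctive candidate that merely repeats an already selected bit adds little information and is passed over.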

Paper

Bin Fan, et al. Receptive Fields Selection for Binary Feature Description, IEEE Transactions on Image Processing, 23(6): 2583-2595, 2014

Software

Download: the C++ code is available on the OpenPR webpage: code (C++, zip, 5.9 MB)

Comparative results on Brown's dataset (the Patch Dataset). The results are reported as the false positive rate (in %) at 95% recall. For learning-based descriptors, we give results for the two training datasets per testing dataset; for descriptors that do not depend on training data, we give one result per testing dataset. The number of bits used to encode each descriptor is given in parentheses. We assume 1 byte (8 bits) per dimension for floating-point descriptors, since this quantization was reported as sufficient for SIFT in the literature. RFD significantly outperforms its binary competitors and performs comparably to the best floating-point descriptors.

| Train | Yosemite | Notre Dame | Yosemite | Liberty | Notre Dame | Liberty |
|---|---|---|---|---|---|---|
| Test | Liberty | Liberty | Notre Dame | Notre Dame | Yosemite | Yosemite |
| Binary Descriptors | | | | | | |
| RFDR | 19.40 (598) | 19.35 (446) | 11.68 (598) | 13.23 (293) | 14.50 (446) | 16.99 (293) |
| RFDG | 19.03 (563) | 17.77 (542) | 11.37 (563) | 12.49 (406) | 15.14 (542) | 17.62 (406) |
| BGM | 22.18 (256) | 21.62 (256) | 14.69 (256) | 15.99 (256) | 18.42 (256) | 21.11 (256) |
| BinBoost | 21.67 (64) | 20.49 (64) | 14.54 (64) | 16.90 (64) | 18.97 (64) | 22.88 (64) |
| SIFT-KSH | 44.87 (128) | 44.71 (128) | 35.73 (128) | 34.84 (128) | 37.59 (128) | 36.31 (128) |
| BRIEF | 54.01 (512) | | 48.64 (512) | | 52.69 (512) | |
| FREAK | 58.14 (512) | | 50.62 (512) | | 52.95 (512) | |
| Floating-point Descriptors | | | | | | |
| SIFT | 32.46 (1024) | | 26.44 (1024) | | 30.84 (1024) | |
| CSLBP | 33.87 (2048) | | 28.92 (2048) | | 39.75 (1024) | |
| L-BGM | 21.03 (512) | 18.05 (512) | 13.73 (512) | 14.15 (512) | 15.86 (512) | 19.63 (512) |
| Brown et al. | 18.27 (232) | 16.85 (288) | 11.98 (232) | - | 13.55 (288) | - |
| Simonyan et al. | 17.44 (232) | 14.51 (288) | 9.67 (232) | - | 12.54 (288) | - |

Notes:

1. With the normalization method for gradient orientation maps proposed in Simonyan et al.'s paper, our method can be further improved by 1~2%.

2. The results in Simonyan et al.'s TPAMI paper differ slightly from those listed here, which are taken from their ECCV paper.
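For readers unfamiliar with the metric in the table above, the following sketch shows one common way to compute the false positive rate at a given recall from descriptor distances. It is an illustration, not the evaluation code used for the reported numbers: the function name, its arguments, and the tie-handling rule (accept every pair whose distance is at most the threshold) are assumptions.

```python
import math
from typing import Sequence


def fpr_at_recall(match_dists: Sequence[float],
                  nonmatch_dists: Sequence[float],
                  recall: float = 0.95) -> float:
    """Fraction of non-matching pairs accepted at the smallest distance
    threshold that accepts at least `recall` of the matching pairs."""
    ranked = sorted(match_dists)
    # Number of matching pairs that must be accepted to reach the target recall.
    needed = max(1, math.ceil(recall * len(ranked)))
    threshold = ranked[needed - 1]
    false_positives = sum(d <= threshold for d in nonmatch_dists)
    return false_positives / len(nonmatch_dists)


# Toy example with a 50% recall target: the threshold becomes 2,
# and one of the four non-matching pairs falls at or below it.
rate = fpr_at_recall([1, 2, 3, 4], [2, 5, 6, 7], recall=0.5)
```

The function returns a fraction; multiplying it by 100 gives a percentage as used in the table.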

Oxford Dataset

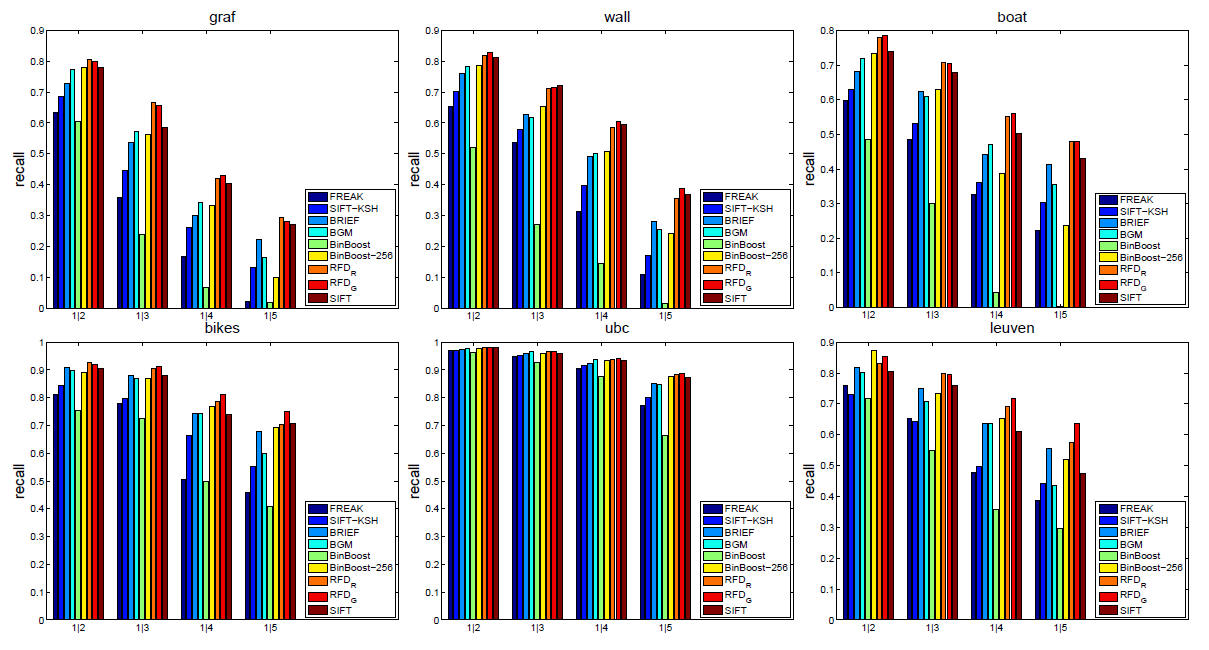

Recall when the precision is 80% for six image sequences in the Oxford dataset.

Note that for a fair comparison, all the descriptors are built on the same interest regions (including rotation), which are detected by the Hessian-Affine detector.

Object Recognition

Object recognition accuracies. For a fair comparison, all the descriptors are built on the same interest regions (including rotation), which are detected by the Hessian-Affine detector.

| | SIFT | FREAK | BRIEF | SIFT-KSH | BGM | BinBoost | BinBoost-256 | RFDR | RFDG |
|---|---|---|---|---|---|---|---|---|---|
| ZuBuD | 75.5% | 48.8% | 70.5% | 64.6% | 67.3% | 62.3% | 78.6% | 80.7% | 82.5% |
| Kentucky | 48.2% | 21.9% | 41.6% | 29.8% | 36.3% | 19.2% | 50.6% | 62.5% | 65.1% |

References

BGM/L-BGM: T. Trzcinski, M. Christoudias, V. Lepetit, and P. Fua. "Learning image descriptors with the boosting-trick", NIPS 2012.

BinBoost: T. Trzcinski, M. Christoudias, P. Fua, and V. Lepetit. "Boosting binary keypoint descriptors", CVPR 2013.

SIFT-KSH: W. Liu, J. Wang, R. Ji, Y.-G. Jiang, and S.-F. Chang. "Supervised hashing with kernels", CVPR 2012.

BRIEF: M. Calonder, V. Lepetit, M. Ozuysal, T. Trzcinski, C. Strecha, and P. Fua. "BRIEF: Computing a local binary descriptor very fast", TPAMI 2012.

FREAK: A. Alahi, R. Ortiz, and P. Vandergheynst. "FREAK: Fast retina keypoint", CVPR 2012.

SIFT: D. Lowe. "Distinctive image features from scale invariant keypoints", IJCV 2004.

CSLBP: M. Heikkila, M. Pietikainen, and C. Schmid. "Description of interest regions with local binary patterns", PR 2009.

Brown et al.: M. Brown, G. Hua, and S. Winder. "Discriminative learning of local image descriptors", TPAMI 2011.

Simonyan et al.: K. Simonyan, A. Vedaldi, and A. Zisserman. "Descriptor learning using convex optimisation", ECCV 2012.

Bin Fan, bfan@nlpr.ia.ac.cn